A Digital Procurement Matchmaker for Vendors & Clients Alike with Amazon Bedrock

About the Customer

Tender responses and submission reviews are the necessary but unsexy reality of vendor-client relationships at every level of the enterprise ecosystem. On the vendor side, teams review and respond to dozens, if not hundreds, of opportunities within pressurised timeframes, each requiring contextual responses that comply with the tender criteria and input from across every function of the respondent’s organisation. On the issuer side, teams must review and assess mountains of tender submissions for suitability and compliance. The pressure is significant, and the margin for human error is huge. Our Client, an up-and-coming project management firm servicing the public sector, presented us with an opportunity to help them solve this problem.

Customer Challenge

In the constantly evolving landscape of procurement, modern businesses are increasingly seeking ways to streamline lengthy and tedious tender and contract development processes. For vendors, preparing a tender submission is a significant investment of time, resources and expertise across all functions of the business. Staff working on RFPs and RFQs typically spend days, weeks or even months responding to or evaluating these documents, and extracting information from them without mistakes takes time. And it’s a task to which nobody is immune. You can run, but you can’t hide when the tender team come knocking for input! For organisations fortunate enough to have high volumes of opportunities to respond to within strict timeframes, it is essential that business development teams can swiftly review and assess the eligibility and compliance criteria to ensure suitability before committing resources to a submission.

Likewise, for procurement teams receiving high volumes of lengthy submissions, the ability to summarise and assess key selection criteria can create enormous efficiencies and accuracy in the vendor selection process.

Our task was to develop artificial intelligence-based software that could offer a revolutionary new approach to tender and contract development. By automating and streamlining common procurement processes, companies can save time and effort while also increasing their chances of winning valuable contracts.

Risk and impact if not addressed

Tenders are large, complex documents that contain rich information outlining the terms and conditions with which responding companies need to comply. These constraints need to be addressed in sufficient detail in the tender response documents. Failing to address them typically leads to delays and, more often, to disqualification during the tender evaluation process.

There are significant financial implications in non-compliance with the requirements.

Key Metrics for Success

The metrics used to measure the outcome of the project are:

- How much elapsed time it takes to derive factoids from tender documents. The elapsed time reduction is proportional to the reduced toil required by skilled staff deriving and interpreting core facts (e.g. insurance requirements) from the tender documents.

- The completeness of the requirements derived from tender documents. This measures core requirements missed by the reader as compared to the results of the LLM evaluating the tender.
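
One way to picture the completeness metric is as the share of a reference list of core requirements that a reader (human or LLM) actually found, scaled to a score out of 10. The sketch below is only an illustration under that assumption: the source does not say how the score is computed, and the function name, the example requirements and the case-insensitive exact matching are all hypothetical.

```python
def completeness_score(found, reference, scale=10.0):
    """Share of reference requirements that were found, scaled to 0..scale.

    Assumption: requirements are matched by exact text after trimming and
    lower-casing. A real comparison would likely need fuzzier matching.
    """
    reference_set = {item.strip().lower() for item in reference}
    if not reference_set:
        raise ValueError("reference list must not be empty")
    found_set = {item.strip().lower() for item in found}
    return round(scale * len(found_set & reference_set) / len(reference_set), 1)


def missed_requirements(found, reference):
    """Reference requirements that the reader did not report."""
    found_set = {item.strip().lower() for item in found}
    return sorted(r for r in reference if r.strip().lower() not in found_set)


# Hypothetical example data, not taken from a real tender.
reference = [
    "public liability insurance",
    "ISO 9001 certification",
    "local content plan",
    "submission deadline",
]
llm_found = ["Public liability insurance", "submission deadline", "ISO 9001 certification"]

score = completeness_score(llm_found, reference)
missed = missed_requirements(llm_found, reference)
```

Here the reader found three of the four reference requirements, so the score is 7.5 out of 10 and `missed` lists the one requirement that was overlooked.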

OptiSol’s Solution

To solve these challenges and ease friction on both sides of the vendor-client landscape, OptiSol developed LLM-based software that can analyse and understand contract terms and conditions without human intervention. The software uses natural language processing (NLP) and machine learning (ML) algorithms to read, comprehend and review every contract term to identify potential contract obligations.

One of the best features of the software is its Document Summarisation function, which simplifies tender assessments by removing the need to sift through large volumes of documentation. We used an LLM to distil core details from procurement and supply chain documents into structured information for operations staff to use. With this feature, the software automatically identifies and summarises key information in tender documents, giving procurement professionals a clearer picture. Operations staff can also ask the LLM questions about a document and refine those questions based on the answers received.

Furthermore, the LLM can extract critical contract obligations, a task that was previously performed manually. From these obligations, the software can assist in creating content that matches the company profile to the tender requirements.

Finally, the AI-based tender software partially automates the pricing estimation process. Using risk-adjusted cost estimation techniques, the software calculates pricing estimates that are based on historical data, business rules, and other relevant factors.

Architecture

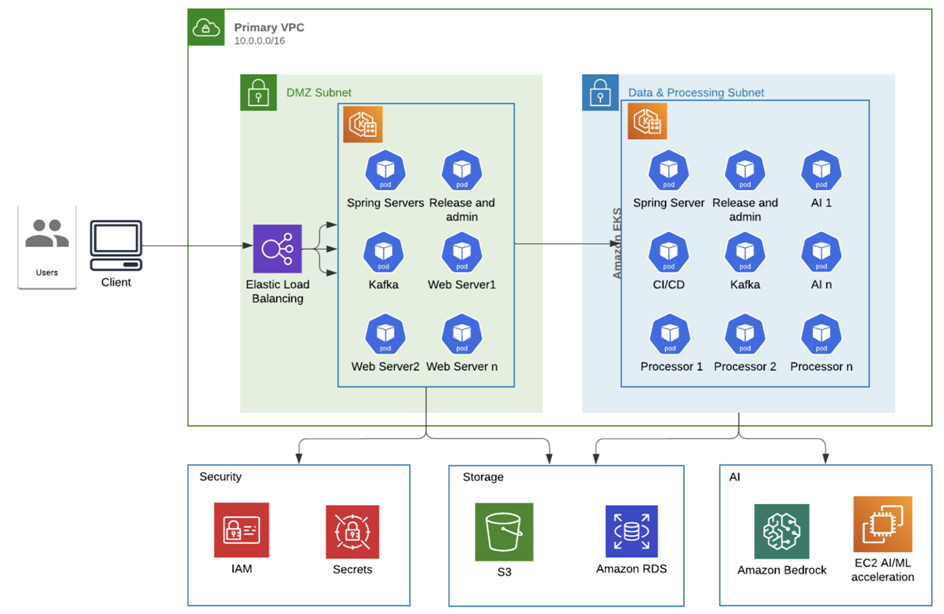

To realise the full potential for our Client’s end users, the solution architecture combines multiple services and tools from AWS, with Bedrock at the heart of the solution.

Kubernetes is an ideal platform for this solution, as it provides robust methods for redundancy and self-healing, which are needed for maintaining high availability and reliability. The architecture is designed so that traffic is automatically distributed across multiple containers. Any containers that fail are automatically recreated, maintaining seamless operation under varying loads. Containerisation simplifies scaling and software updates and allows both to be automated through scripting. Together, these features make maintenance and administration far simpler.

Process and Services across the Solution

- The client browser request reaches AWS Elastic Load Balancing (ELB), which routes it to one of the containers serving the website within the DMZ subnet.

- Two Kubernetes nodes are deployed across two different subnets, one in a DMZ and another in a completely closed off and private subnet, inaccessible from outside. The Kubernetes nodes run specific services (pods), each with a dedicated function.

- The user is able to upload tender documents to the website. The website stores the documents in an S3 bucket.

- Kafka is used to ingest additional documents for admin purposes. These documents are stored in S3.

- The web server uses AWS Identity and Access Management (IAM) to authorise admin users. General users are authenticated using LinkedIn, leveraging OAuth 2.0 to securely manage the authentication process.

- The application uses AWS Secrets Manager to securely manage and retrieve sensitive information such as API keys, RDBMS credentials, and general configuration secrets.

- Once the web server has established the user identity and also the rights (authority) of the user, the request is forwarded to an application server worker pod in the secure Kubernetes node (the processor).

- The Processor applications implement the business logic and use Retrieval-Augmented Generation (RAG), built with LangChain, to access data from the RDBMS and to orchestrate and manage interactions with Bedrock. Combining the two technologies lets the application servers both extract the core facts from uploaded tenders and summarise those facts efficiently. The application relies on the NLP capability that Bedrock provides to perform contextual analysis of document content. This combination provides high accuracy in transforming the different writing styles found across diverse tenders into a consistent content structure.

- The AI pods are narrow AI models that are used to validate various documents received from clients. The objective is both to create metadata on the structure and content of the data as well as to establish the data quality. The AI algorithms make use of the DAMA framework for determining the data quality of all data received.

- The AI worker pods make use of GPU acceleration to run the various AI algorithms. This process uses RPC-style calls to GPU-accelerated VMs.

- Responses from Bedrock are framed into downloadable documents that are stored in S3 and are available for users to download.
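
Two of the steps above, storing an uploaded tender in S3 and reading database credentials from Secrets Manager, can be sketched as follows. This is a minimal illustration rather than the project’s actual code. It assumes the clients are created elsewhere with `boto3.client("s3")` and `boto3.client("secretsmanager")`, and that the secret is stored as a JSON string. The function names, the key layout and the idea of one folder per user are hypothetical.

```python
import json


def store_tender(s3_client, bucket, user_id, filename, data):
    """Upload one tender document and return the object key.

    Assumption: s3_client is a boto3 S3 client; the key layout
    "tenders/<user>/<file>" is purely illustrative.
    """
    key = f"tenders/{user_id}/{filename}"
    s3_client.put_object(Bucket=bucket, Key=key, Body=data)
    return key


def load_db_credentials(secrets_client, secret_id):
    """Fetch and decode a JSON secret holding the RDBMS credentials.

    Assumption: secrets_client is a boto3 Secrets Manager client and the
    secret value was saved as a JSON string.
    """
    response = secrets_client.get_secret_value(SecretId=secret_id)
    return json.loads(response["SecretString"])
```

Passing the clients in as arguments keeps the functions free of global state, so they can be exercised with simple stand-in objects before being pointed at real AWS resources.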

Storage and Process

The solution uses S3 to store documents received from clients. These documents are parsed using Bedrock to extract factoids. The factoids are stored in an SQL database and then sent back to Bedrock, which constructs the templates and language around them to produce the summary.
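
The round trip described here, with factoids saved to a SQL database and then assembled into a summarisation prompt, might look roughly like the sketch below. It uses an in-memory SQLite database purely as a stand-in for the project’s RDBMS. The table layout, function names, prompt wording and example factoids are all assumptions made for illustration.

```python
import sqlite3


def init_store(conn):
    """Create a minimal factoid table (hypothetical schema)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS factoids ("
        "document_key TEXT NOT NULL, category TEXT NOT NULL, value TEXT NOT NULL)"
    )


def save_factoids(conn, document_key, factoids):
    """Persist the category/value pairs extracted from one document."""
    rows = [(document_key, category, value) for category, value in factoids.items()]
    conn.executemany("INSERT INTO factoids VALUES (?, ?, ?)", rows)
    conn.commit()


def build_summary_prompt(conn, document_key):
    """Turn the stored factoids into a prompt for the summarisation call."""
    rows = conn.execute(
        "SELECT category, value FROM factoids WHERE document_key = ? ORDER BY category",
        (document_key,),
    ).fetchall()
    lines = [f"- {category}: {value}" for category, value in rows]
    return "Summarise the following tender facts:\n" + "\n".join(lines)


# Example run with made-up factoids.
conn = sqlite3.connect(":memory:")
init_store(conn)
save_factoids(
    conn,
    "tenders/u42/rfp.pdf",
    {"insurance": "Public liability cover required", "deadline": "Submissions close at 5 pm"},
)
prompt = build_summary_prompt(conn, "tenders/u42/rfp.pdf")
```

Keeping the factoids in a database between the two model calls means the extracted facts can be reviewed or corrected before any summary text is generated from them.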

External libraries

As per the architecture, the solution uses LangChain to provide the framework for the application to implement the document extraction. Bedrock and LangChain allowed the solution to implement a more sophisticated approach to generate natural language around the factoids extracted from the source documents.

The solution implements complex chains of operations that involve multiple model invocations. LangChain allows us to sequence tasks so that the output of one model is passed as input to the next within a set process. It also manages the interaction between these models and Bedrock, including the context of running processes.
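
The chaining idea can be shown without any library at all. The sketch below is plain Python, not LangChain’s API: it only illustrates the pattern of feeding one model call’s output into the next. The `llm` argument is assumed to be any callable that takes a prompt string and returns text, and the step names and prompt wording are hypothetical.

```python
from functools import reduce
from typing import Callable

Step = Callable[[str], str]


def make_chain(*steps: Step) -> Step:
    """Compose steps so that each one receives the previous step's output."""
    def run(text: str) -> str:
        return reduce(lambda value, step: step(value), steps, text)
    return run


def extract_step(llm: Step) -> Step:
    """First model call: pull obligations out of the raw tender text."""
    return lambda document: llm(f"List the key obligations in this tender:\n{document}")


def summarise_step(llm: Step) -> Step:
    """Second model call: summarise the obligations found by the first."""
    return lambda obligations: llm(f"Summarise these obligations in plain English:\n{obligations}")


# A stand-in "model" that records its prompts, used only to show the flow.
prompts_seen = []

def recording_llm(prompt: str) -> str:
    prompts_seen.append(prompt)
    return prompt.splitlines()[-1].upper()

chain = make_chain(extract_step(recording_llm), summarise_step(recording_llm))
result = chain("Supplier must hold insurance.")
```

After the run, `prompts_seen` holds two prompts, and the second one contains the output of the first call, which is the behaviour the paragraph above describes.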

DevOps

Kubernetes simplifies DevOps by automating the deployment, scaling, and management of the containers that are central to the architecture. Docker containers let us perform blue/green deployments through CI/CD pipelines. Code changes are automatically tested, built, and deployed across the environment with minimal manual intervention. The solution uses GitHub and GitHub Actions for the CI pipelines, and Helm charts and Argo CD for deployment automation (CD).

Scaling

As the workloads are deployed in pods across two independent Kubernetes nodes, the solution is scaled either manually through updates to the Helm charts (Kubernetes scripting) or through an automated service. Kubernetes autoscaling is used to automatically increase or decrease the number of nodes, or adjust pod resources, in response to demand.

High Availability

We achieve high availability in our Kubernetes deployment by leveraging its inherent features such as automated scaling, load balancing, and self-healing. Our applications are deployed across multiple pods. This distribution ensures that even if a pod fails, the application remains accessible as other pods continue to serve requests. Kubernetes is configured to maintain a specified number of running instances of each pod, automatically replacing any failed pods to ensure continuous availability.

This solution runs in a single VPC and a single Availability Zone (AZ). Although high availability is important, the client did not consider it worth the additional cost of operating a redundant installation in a separate AZ. The risk is mitigated by the use of containerisation and by the high uptime that AWS has historically demonstrated.

VPC and Subnets

The solution uses subnets within a single Amazon VPC to logically segment the application’s network, enhancing security and network management. By dividing the VPC into multiple subnets, we control the flow of traffic between resources.

Bedrock

The solution uses Amazon Bedrock as a managed service, which simplifies the integration of Llama 2. This reduces the project’s dependency on scarce and expensive LLM expertise. Bedrock further simplifies scaling and maintenance whilst providing high availability for the application. Managing and deploying AI models is also simplified, reducing the cost of maintenance and operations.
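
To give a sense of how little code a managed Llama 2 call needs, here is a minimal sketch of a Bedrock invocation. It assumes a client created elsewhere with `boto3.client("bedrock-runtime")` and the Llama 2 request and response fields (`prompt`, `max_gen_len`, `temperature`, `top_p`, `generation`). The model ID, the parameter values and the function names are assumptions, since the source does not state which Llama 2 variant or settings were used.

```python
import json

# Assumption: one of the Llama 2 chat model IDs; the source does not name it.
MODEL_ID = "meta.llama2-13b-chat-v1"


def build_llama2_request(prompt, max_gen_len=512, temperature=0.2, top_p=0.9):
    """Serialise a request body in the shape Llama 2 expects on Bedrock."""
    return json.dumps(
        {
            "prompt": prompt,
            "max_gen_len": max_gen_len,
            "temperature": temperature,
            "top_p": top_p,
        }
    )


def ask_bedrock(bedrock_runtime, prompt, model_id=MODEL_ID):
    """Send one prompt to the model and return the generated text.

    Assumption: bedrock_runtime is a boto3 "bedrock-runtime" client.
    """
    response = bedrock_runtime.invoke_model(
        modelId=model_id,
        body=build_llama2_request(prompt),
        contentType="application/json",
        accept="application/json",
    )
    payload = json.loads(response["body"].read())
    return payload["generation"]
```

A call such as `ask_bedrock(client, "What insurance does this tender require?")` would then return the model’s answer as a plain string, with no model hosting or GPU provisioning on the application side.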

Business impact

Upon initiation of the project, it was determined that success would be measured by:

- A reduction in elapsed time / toil required to derive factoids from tender documents, and

- The completeness of the requirements derived from tender documents (reviewer vs LLM).

This transformation digitises the tendering and contracting process, increasing accuracy and reducing the time spent reviewing or preparing tender documentation by 15%. Companies can spend less time on administration and focus instead on winning the best contracts and signing the best vendors for the job.

The average tender requires 12 effort days from the preparer and 20 effort days from the reviewer. A 15% reduction in elapsed time (toil) amounts to a saving of 1.8 days for the preparer and 3 days for the reviewer, worth $851 and $1,418 respectively. On average, our Client’s end users prepare 25 tenders and review 50 tenders per annum. The annualised savings amount to $25,527 for preparers (ROI of 70%) and $70,909 for reviewers (ROI of 184%).
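
The arithmetic behind these figures can be reproduced in a few lines. The sketch assumes a single blended day rate, inferred here from the stated $1,418 saving for 3 reviewer days (about $473 per day). That rate is an inference from the text rather than a number the source gives, and the function names are hypothetical.

```python
# Assumption: blended day rate implied by "$1,418 for 3 days"; not stated in the source.
DAY_RATE = 1418 / 3
REDUCTION = 0.15  # the 15% reduction in elapsed time reported above


def saving_per_tender(effort_days, reduction=REDUCTION, day_rate=DAY_RATE):
    """Dollar value of the effort saved on a single tender."""
    return effort_days * reduction * day_rate


def annual_saving(effort_days, tenders_per_year, reduction=REDUCTION, day_rate=DAY_RATE):
    """Per-tender saving multiplied by the yearly tender volume."""
    return saving_per_tender(effort_days, reduction, day_rate) * tenders_per_year


preparer_per_tender = round(saving_per_tender(12))   # 12 effort days per tender
reviewer_per_tender = round(saving_per_tender(20))   # 20 effort days per tender
preparer_annual = round(annual_saving(12, 25))       # 25 tenders prepared per year
reviewer_annual = round(annual_saving(20, 50))       # 50 tenders reviewed per year
```

With these inputs the per-tender savings come out at $851 and $1,418, and the reviewer’s annual figure at about $70,900, matching the stated values to within rounding. The preparer’s annual figure comes out at roughly $21,270 for 25 tenders, below the stated $25,527, which would correspond to about 30 tenders a year at the same rate, so the preparer inputs are worth re-checking against the project data.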

Furthermore, over the 12 months following deployment, the LLM’s completeness score (i.e. core requirements derived from the tender) rose from 5.7 to 9.4 out of 10, against a manual-review score of 8.3. This required continuous enhancement of the solution, underpinned by strong collaboration between both our teams (OptiSol and our Client).

By saving time and reducing the potential for human error that often accompanies manual document review, the solution allows companies to make more informed decisions when selecting vendors for their projects, while also allowing vendors to quickly assess suitability for a contract before committing time and resources to a tender response. Furthermore, the content creation function ensures that the vendor’s unique value proposition is highlighted in the tender, which can give businesses a significant competitive advantage.

In terms of reducing labour wastage, human error and increasing the likelihood of successful vendor-client contracts, OptiSol’s solution is a win-win for procurement teams and vendors alike.

Conclusion

In today’s competitive procurement landscape, the need for efficiency and accuracy in tender and contract development processes is more critical than ever. Our collaboration with our Client to address this challenge highlights the transformative potential of leveraging GenAI to automate and streamline complex tasks.

The success of this initiative is being measured by the reduction in time per task, allowing organisations to prepare / respond to tenders with the agility and precision required in today’s fast-paced market. Together with our Client, we are paving the way for a more efficient and effective procurement process, ultimately contributing to better vendor-client relationships and greater business success.

Lessons learned

The technology in the Generative AI sector is advancing rapidly, and the tools our engineers used to develop the solution became obsolete within three months of completion. There are also many startups competing in this field. The lesson learned was the importance of designing the architecture to be modular, enabling easy updates to tools, libraries, and services with minimal effort and disruption. This task proved more challenging than expected, but it remains crucial.

What does our Client say about OptiSol

“Our Business Development Team is so excited about the latest addition to our procurement arsenal. OptiSol’s ‘Digital Matchmaker’ AI solution simplifies and automates much of the typically time-consuming task of tender and contract development, improving success and alignment across all our client relationships, and winning more contracts than ever before.”

Samuel Bakara, Director at SB Energy Australia