Modernising Incident Management and Compliance with GenAI on Amazon Bedrock

About the Customer

In the realm of Workplace Health & Safety (WH&S), organisations are mandated to investigate any notifiable high-risk incidents. This obligation is not only a regulatory requirement but also a critical component in ensuring workplace safety and compliance with industry standards. Effective systems for incident reporting and investigation are crucial, especially when subjected to spontaneous audits by external inspectors.

Our customer, Inertia Technology, a GenAI-enabled start-up, is developing an objective and intelligent platform for incident reporting and analysis. The goal is to reduce human emotional bias and reliance on highly experienced senior investigators to identify the root cause of incidents in the most accurate and efficient way possible.

Customer Challenge

Incident reporting is particularly vital for maintaining adherence to standards such as ISO 45001 for safety, ISO 14001 for environmental management, and ISO 9001 for quality assurance. Each of these standards requires annual audits, where companies must demonstrate ongoing compliance through detailed evidence of their practices and processes.

Two effective methods employed in incident investigation and reporting are the “five whys” technique and ICAM (Incident Cause Analysis Method). These approaches aid investigators in pinpointing the root cause and contributing factors of an incident by methodically dissecting each level of cause and effect.

However, while powerful, both techniques demand a significant level of expertise, as they hinge on the investigators’ ability to navigate the correct sequence of questions to unravel the underlying issues thoroughly.

Key Metrics for Success

The outcome of the project is measured by two metrics:

- How long it would take to deploy an investigator to an incident. This metric speaks to the availability of experienced investigators. The more efficient they are, the faster they can complete investigations before becoming available for subsequent assignments.

- How long it would take to complete an investigation from allocation of the investigator to the final report.

The Risk and Impact if Not Addressed

Challenges arise when experienced investigators are not available within the critical timeframes prescribed for a serious incident investigation. Even with the most seasoned professionals, time pressure introduces human biases and emotions that can skew the process, potentially compromising the integrity and accuracy of the findings derived from the “five whys” technique.

Furthermore, evidence must be collected and analysed promptly to ensure it is relevant to the events being investigated. Delays can result in evidence losing its contextual value or becoming outdated due to changes in circumstances.

Unfortunately, organisations that employ staff for physical labour (e.g. logistics, manufacturing, mining and construction) often experience workplace incidents, which requires experienced investigators to be available on site during operational hours.

Poor incident reporting and analysis not only has a negative impact on employee welfare and business reputation but can also significantly increase the cost of workers compensation insurance.

For example, this cost typically ranges between 3% and 5% of total payroll costs ($9-15 million on a payroll of $300 million). However, it can rise to as much as 15% of total payroll costs ($45 million on a $300 million payroll) for organisations that have a poor LTIFR (Lost Time Injury Frequency Rate) compared to industry standards. As part of policy due diligence, insurers undertake a detailed review of the incident reporting and analysis process.
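
The dollar figures above follow from simple percentage arithmetic. A minimal sketch, using the $300 million payroll from the example; the function and variable names are illustrative only:

```python
def premium(payroll: int, rate_percent: int) -> int:
    """Annual workers compensation cost in whole dollars for a whole-percent rate."""
    # Integer arithmetic keeps the result exact.
    return payroll * rate_percent // 100


PAYROLL = 300_000_000  # payroll figure taken from the example above

typical_low = premium(PAYROLL, 3)    # 9_000_000
typical_high = premium(PAYROLL, 5)   # 15_000_000
poor_ltifr = premium(PAYROLL, 15)    # 45_000_000

# Extra annual cost of a poor LTIFR relative to the top of the typical range.
extra_cost = poor_ltifr - typical_high  # 30_000_000
```

On these numbers, moving from the top of the typical range to the poor-LTIFR rate adds $30 million a year.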

OptiSol’s Solution

Recognising these vulnerabilities, our team set out to build a solution that improves both the efficiency and accuracy of the investigation process, empowering investigators at various levels of expertise to conduct thorough, unbiased investigations swiftly.

To address the complexities of the ‘five whys’ investigation technique, we introduced a Generative AI solution that uses a Large Language Model (LLM) to generate investigative questions. The system uses Amazon Bedrock and various other AWS services to perform root cause analysis. Bedrock generates each new question in response to the answers it has already received, so that every subsequent question delves deeper into the incident details.

How does it work? Bedrock utilises a sophisticated LLM at its core, which processes data and tailors questions based on the responses to previous ones. This iterative process ensures that each question is contextually relevant and progressively focuses on narrowing down the exact root cause of an issue. By building on the information gathered from each response, Bedrock effectively guides investigators through the logical layers of inquiry inherent in the ‘five whys’ method. This AI-driven approach not only streamlines the investigative process but also significantly reduces the influence of human bias and error, leading to more accurate and reliable conclusions in less time.
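
The iterative questioning described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the production implementation: `ask_model` is a hypothetical wrapper around the LLM call (for example, a Bedrock invocation), `ask_investigator` is a hypothetical function that collects the investigator's answer, and the prompt wording is invented for the example.

```python
from typing import Callable, List, Tuple

QA = Tuple[str, str]  # (question, answer)


def build_prompt(incident: str, history: List[QA]) -> str:
    """Assemble a prompt holding the incident summary and every prior Q&A pair."""
    lines = [f"Incident: {incident}"]
    for i, (question, answer) in enumerate(history, start=1):
        lines.append(f"Q{i}: {question}")
        lines.append(f"A{i}: {answer}")
    # Hypothetical instruction text; the real prompt is not given in the source.
    lines.append("Ask the next 'why' question that probes the cause of the last answer.")
    return "\n".join(lines)


def five_whys(
    incident: str,
    ask_model: Callable[[str], str],         # assumption: wraps the LLM call
    ask_investigator: Callable[[str], str],  # assumption: collects a human answer
    depth: int = 5,
) -> List[QA]:
    """Run `depth` rounds, feeding all earlier answers back into each new question."""
    history: List[QA] = []
    for _ in range(depth):
        question = ask_model(build_prompt(incident, history))
        answer = ask_investigator(question)
        history.append((question, answer))
    return history
```

Because the model call is injected, the loop can be exercised with a stub in place of the LLM, which keeps the questioning logic testable without network access.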

Architecture

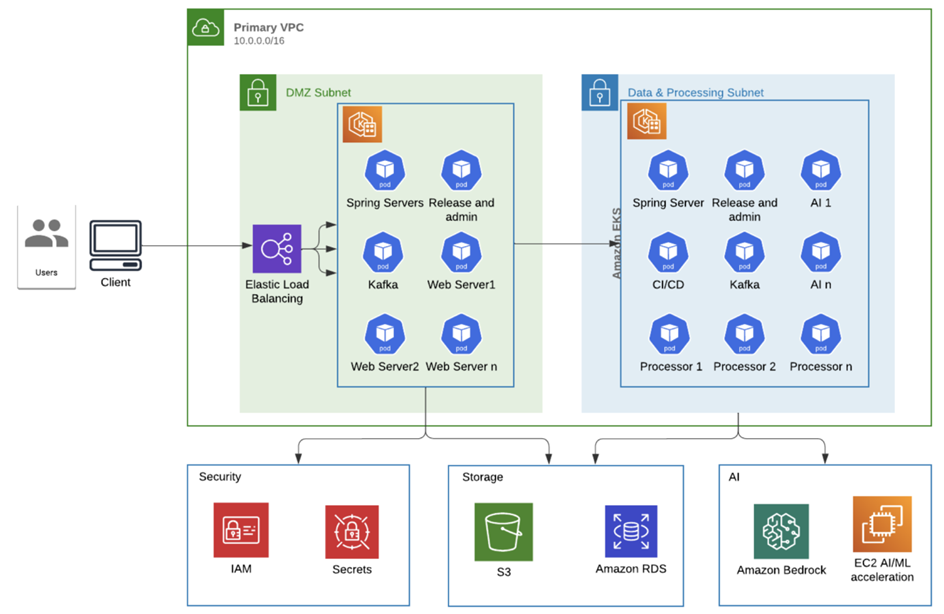

To realise the full potential of the platform for Inertia Technology’s clients, the solution architecture combines multiple AWS services and tools, with Bedrock at the heart of the solution.

Kubernetes is an ideal platform for this solution, as it provides robust mechanisms for redundancy and self-healing, which are needed to maintain high availability and reliability. The architecture is designed so that traffic is automatically distributed across multiple containers. Any containers that fail are automatically recreated, maintaining seamless operation under varying loads. Containerisation simplifies scaling and software updates and allows them to be automated through scripting. Collectively, these features make maintenance and administration far simpler.

Process and Services across the Solution

- The client browser request reaches AWS Elastic Load Balancing (ELB), which routes it to one of the containers serving the website within the DMZ subnet.

- Two Kubernetes nodes are deployed across two different subnets, one in a DMZ and another in a completely closed off and private subnet, inaccessible from outside. The Kubernetes nodes run specific services (pods), each with a dedicated function.

- Users can upload compliance documents to the website, which stores them in an S3 bucket.

- Kafka is used to ingest additional documents for admin purposes. These documents are stored in S3.

- The web server uses AWS Identity and Access Management (IAM) to authorise admin users. General users are authenticated using LinkedIn, leveraging OAuth 2.0 to securely manage the authentication process.

- The application uses AWS Secrets Manager to securely manage and retrieve sensitive information such as API keys, RDBMS credentials, and general configuration secrets.

- Once the web server has established the user identity and also the rights (authority) of the user, the request is forwarded to an application server worker pod in the secure Kubernetes node (the processor).

- The processor applications implement the business logic and use Retrieval Augmented Generation (via LangChain) to access data from the RDBMS and to orchestrate and manage interactions with Bedrock. By combining the two technologies, the application servers can both extract the core facts from the uploaded documents and populate these facts into template documents. The application relies on the NLP capability that Bedrock provides to perform contextual analysis on the content of the documents. This combination of technologies provides high accuracy in transforming the different writing styles found across diverse documents into a consistent content structure when populating document templates.

- The AI pods run narrow AI models that validate the data sets received from the client. The objective is both to create metadata on the structure and content of the data and to establish the data quality. The AI algorithms use the DAMA framework to determine the quality of all data received.

- The AI worker pods use GPU acceleration to run the various AI algorithms. This process uses RPC-style calls to GPU-accelerated VMs.

- Responses from Bedrock are framed into downloadable documents that are stored in S3 and are available for users to download.
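
To give a flavour of the data quality step in the list above, the sketch below scores two dimensions commonly listed in DAMA data quality guidance, completeness and uniqueness, for a batch of records. It is a simple rule-based stand-in and not the narrow AI models the solution actually runs; the record layout and function names are assumptions made for the example.

```python
from typing import Any, Dict, Sequence

Record = Dict[str, Any]


def _present(record: Record, field: str) -> bool:
    """A value counts as present when it is neither missing, None, nor empty."""
    return record.get(field) not in (None, "")


def completeness(records: Sequence[Record], field: str) -> float:
    """Fraction of records in which `field` is present."""
    if not records:
        return 0.0
    return sum(1 for r in records if _present(r, field)) / len(records)


def uniqueness(records: Sequence[Record], field: str) -> float:
    """Fraction of present values of `field` that are distinct (values must be hashable)."""
    values = [r[field] for r in records if _present(r, field)]
    if not values:
        return 0.0
    return len(set(values)) / len(values)


def profile(records: Sequence[Record], fields: Sequence[str]) -> Dict[str, Dict[str, float]]:
    """Per-field quality metadata for a batch of records."""
    return {
        f: {"completeness": completeness(records, f), "uniqueness": uniqueness(records, f)}
        for f in fields
    }
```

A profile like this could sit alongside the structural metadata so that low-quality uploads are flagged before they reach the document builder.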

Storage and Process

The solution uses S3 to store documents received from clients. These documents are parsed using Bedrock to extract factoids. The factoids are stored in an SQL database and then sent to Bedrock, which constructs the templates and the language around the factoids that are populated into template documents.
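
The storage half of this flow can be sketched with the Python standard library. In the sketch, SQLite stands in for the SQL database and `string.Template` stands in for the template documents; the table layout, function names and placeholder syntax are all assumptions, and the Bedrock extraction and language generation steps are deliberately left out.

```python
import sqlite3
from string import Template
from typing import Dict, Iterable, Tuple


def store_factoids(conn: sqlite3.Connection, doc_id: str,
                   factoids: Iterable[Tuple[str, str]]) -> None:
    """Persist (name, value) factoids extracted from one source document."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS factoid (doc_id TEXT, name TEXT, value TEXT)"
    )
    conn.executemany(
        "INSERT INTO factoid (doc_id, name, value) VALUES (?, ?, ?)",
        [(doc_id, name, value) for name, value in factoids],
    )
    conn.commit()


def load_factoids(conn: sqlite3.Connection, doc_id: str) -> Dict[str, str]:
    """Read back all factoids for a document as a name -> value mapping."""
    rows = conn.execute(
        "SELECT name, value FROM factoid WHERE doc_id = ?", (doc_id,)
    )
    return dict(rows.fetchall())


def populate(template_text: str, factoids: Dict[str, str]) -> str:
    """Fill $placeholders; unknown placeholders are left in place rather than raising."""
    return Template(template_text).safe_substitute(factoids)
```

Keeping the factoids in a database between the two model steps means a template can be regenerated without parsing the source document again.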

External libraries

As per the architecture, the solution uses LangChain as the framework in which the application implements the document extraction and document builder workflows. Bedrock and LangChain allowed the solution to take a more sophisticated approach to generating natural language around the factoids extracted from the source documents.

The solution implements complex chains of operations that involve multiple model invocations. LangChain allows us to sequence tasks so that the output of one model is passed as input to a subsequent model within a set process. LangChain also manages the interaction between these models and Bedrock while maintaining the context of running processes.
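
The sequencing pattern itself is easy to show in plain Python. The sketch below is a stand-in for the idea only and does not use LangChain's API: each step is a hypothetical callable that would wrap one model invocation, and the returned trace is a simplified form of the context kept for a running process.

```python
from typing import Callable, List, Sequence, Tuple

Step = Callable[[str], str]  # assumption: each step wraps one model invocation


def run_chain(steps: Sequence[Step], initial: str) -> Tuple[str, List[str]]:
    """Pass `initial` to the first step and each output on to the next step.

    Returns the final output together with a trace of every intermediate value.
    """
    trace = [initial]
    for step in steps:
        trace.append(step(trace[-1]))
    return trace[-1], trace


# Hypothetical steps standing in for "extract facts" and "draft report text".
def extract_facts(document: str) -> str:
    return document.strip().lower()


def draft_paragraph(facts: str) -> str:
    return f"Summary: {facts}."


result, trace = run_chain([extract_facts, draft_paragraph], "  Ladder slipped on wet floor ")
```

Keeping the intermediate outputs makes it possible to inspect or replay any single step when a generated document looks wrong.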

DevOps

Kubernetes simplifies DevOps. It automates the deployment, scaling, and management of the containers that are central to the architecture. Using Docker containers gives us the ability to perform blue/green deployments through CI/CD pipelines. Code changes are automatically tested, built, and deployed across the environment with minimal manual intervention. The solution uses GitHub and GitHub Actions for the CI pipelines, and Helm charts and Argo CD for deployment automation (CD).

Scaling

As the workloads are deployed in pods across two independent Kubernetes nodes, the solution is scaled either manually through updates to the Helm charts (Kubernetes scripting) or through an automated service. Kubernetes autoscaling is used to automatically increase or decrease the number of nodes, or adjust pod resources, in response to demand.
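
The text does not say which autoscaler is configured. As one illustration of how a demand-driven scaling decision is computed, the Kubernetes Horizontal Pod Autoscaler, which adjusts the number of pod replicas rather than nodes or pod resources, documents its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). A minimal sketch of that rule follows; the replica bounds and metric values are hypothetical, and the real controller's tolerance band is omitted.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,   # e.g. observed average CPU utilisation per pod
    target_metric: float,    # e.g. target average CPU utilisation per pod
    min_replicas: int = 1,   # hypothetical bounds for the example
    max_replicas: int = 10,
) -> int:
    """Replica count suggested by the documented HPA ratio, clamped to the bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))
```

For example, two replicas running at 90% utilisation against a 60% target would be scaled to three, while four replicas at 30% against the same target would be scaled down to two.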

High Availability

We achieve high availability in our Kubernetes deployment by leveraging its inherent features such as automated scaling, load balancing, and self-healing. Our applications are deployed across multiple pods. This distribution ensures that even if a pod fails, the application remains accessible as other pods continue to serve requests. Kubernetes is configured to maintain a specified number of running instances of each pod, automatically replacing any failed pods to ensure continuous availability.
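
The self-healing behaviour amounts to a reconciliation loop: compare the running pods with the desired count and replace whatever has failed. The toy simulation below illustrates that idea only; it is not Kubernetes code, and the pod names and health flags are invented for the example.

```python
from itertools import count
from typing import Dict, Iterator


def reconcile(pods: Dict[str, bool], desired: int, names: Iterator[str]) -> Dict[str, bool]:
    """Drop failed pods and start replacements until `desired` healthy pods exist.

    `pods` maps pod name -> healthy flag. Scaling down is not modelled here.
    """
    healthy = {name: True for name, ok in pods.items() if ok}
    while len(healthy) < desired:
        healthy[next(names)] = True  # a freshly started replacement pod
    return healthy


# Hypothetical state: three replicas wanted, one has failed.
new_names = (f"web-{i}" for i in count(3))
state = reconcile({"web-0": True, "web-1": False, "web-2": True}, desired=3, names=new_names)
```

After one pass the failed pod is gone and a replacement has taken its place, so the number of serving pods is back at the desired count.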

This solution runs in a single VPC, in a single Availability Zone (AZ). Although high availability is important, the client felt that the additional cost of operating a redundant installation in a separate AZ was not justified. This risk is mitigated by the high uptime that AWS has historically demonstrated.

VPC and Subnets

The solution uses subnets within a single Amazon VPC to logically segment the application’s network, enhancing security and network management. By dividing the VPC into multiple subnets, we control the flow of traffic between resources.
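
The segmentation can be illustrated with Python's standard `ipaddress` module. The CIDR ranges and the traffic rules in this sketch are hypothetical, chosen only to mirror a layout with one DMZ subnet and one private subnet; they are not the solution's actual network configuration.

```python
import ipaddress

# Hypothetical VPC range, carved into /24 subnets.
VPC_CIDR = ipaddress.ip_network("10.0.0.0/16")
subnets = list(VPC_CIDR.subnets(new_prefix=24))
dmz_subnet, private_subnet = subnets[0], subnets[1]

# Hypothetical rules: the outside world reaches only the DMZ,
# and only the DMZ reaches the private subnet.
ALLOWED_FLOWS = {("outside", "dmz"), ("dmz", "private")}


def zone_of(address: str) -> str:
    """Classify an IP address as belonging to the DMZ, the private subnet, or neither."""
    ip = ipaddress.ip_address(address)
    if ip in dmz_subnet:
        return "dmz"
    if ip in private_subnet:
        return "private"
    return "outside"


def allowed(src: str, dst: str) -> bool:
    """True when traffic from `src` to `dst` stays in one zone or follows an allowed flow."""
    src_zone, dst_zone = zone_of(src), zone_of(dst)
    return src_zone == dst_zone or (src_zone, dst_zone) in ALLOWED_FLOWS
```

Under these rules a request from the internet can reach a web pod in the DMZ but never a processor pod in the private subnet directly.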

Bedrock

The solution uses Amazon Bedrock, a managed service, which makes integrating Llama 2 simpler. This reduces the project’s dependency on highly experienced and highly paid LLM specialists. Bedrock further simplifies scaling and maintenance whilst providing high availability for the application. Managing and deploying AI models is also simplified, reducing the cost of maintenance and operations.

Business impact

Upon initiation of the project, it was determined that success would be measured by:

- The availability of experienced investigators, due to a reduction in efforts required to undertake investigations, and

- How long it would take to complete an investigation from allocation of the investigator to the final report.

By integrating advanced analytical tools and decision-support systems, we have streamlined the investigative process so that it concludes 20% faster than before. This allows less experienced personnel to participate in incident investigations with the same precision as veteran investigators, ensuring that critical decisions are based on solid, unbiased data and are reached promptly, in line with stringent compliance timelines.

More importantly, the availability of experienced investigators within the business has increased from 5% to 80%. The direct impact is a reduction in LTIFR, as a streamlined and stringent investigation process expedites the deployment of incident prevention measures, increasing employee safety and wellbeing.

Conclusion

Inertia Technology has set its vision on fundamentally transforming incident reporting using GenAI, by developing an objective and intelligent platform for incident reporting and analysis.

Our solution will allow Inertia Technology to achieve its goal of improving site safety and employee wellbeing, by streamlining the incident reporting and analysis process. Furthermore, it will allow businesses across Australia to focus on executing preventative measures based on the findings of an investigation, and prioritising site safety.

Lessons learned

The technology within the Generative AI sphere is changing rapidly, and tools that the engineers used to implement the solution had been superseded within three months of its completion. The lesson was to design the architecture in a modular way so that tools, libraries and services can later be replaced with minimal effort and impact. This is more difficult than initially expected, but it still warrants serious consideration.

What does Pierre Mare, Managing Director & Founder of Inertia Technology, say about OptiSol?

“When incidents and accidents happen on site, it’s vital for the safety of everyone that we get to the root cause straight away – not wait for complex, lengthy investigations led by experts with patchy availability and differing opinions and levels of expertise. With Inertia’s Gen AI solution for incident investigation and reporting, we can get to the root cause faster, impartially and with more accuracy, keeping our team safe and keeping our records thorough and current.”

Pierre Mare, Managing Director & Founder of Inertia Technology